Photo by Christopher Gower on Unsplash

Why MLOps is the real machine learning

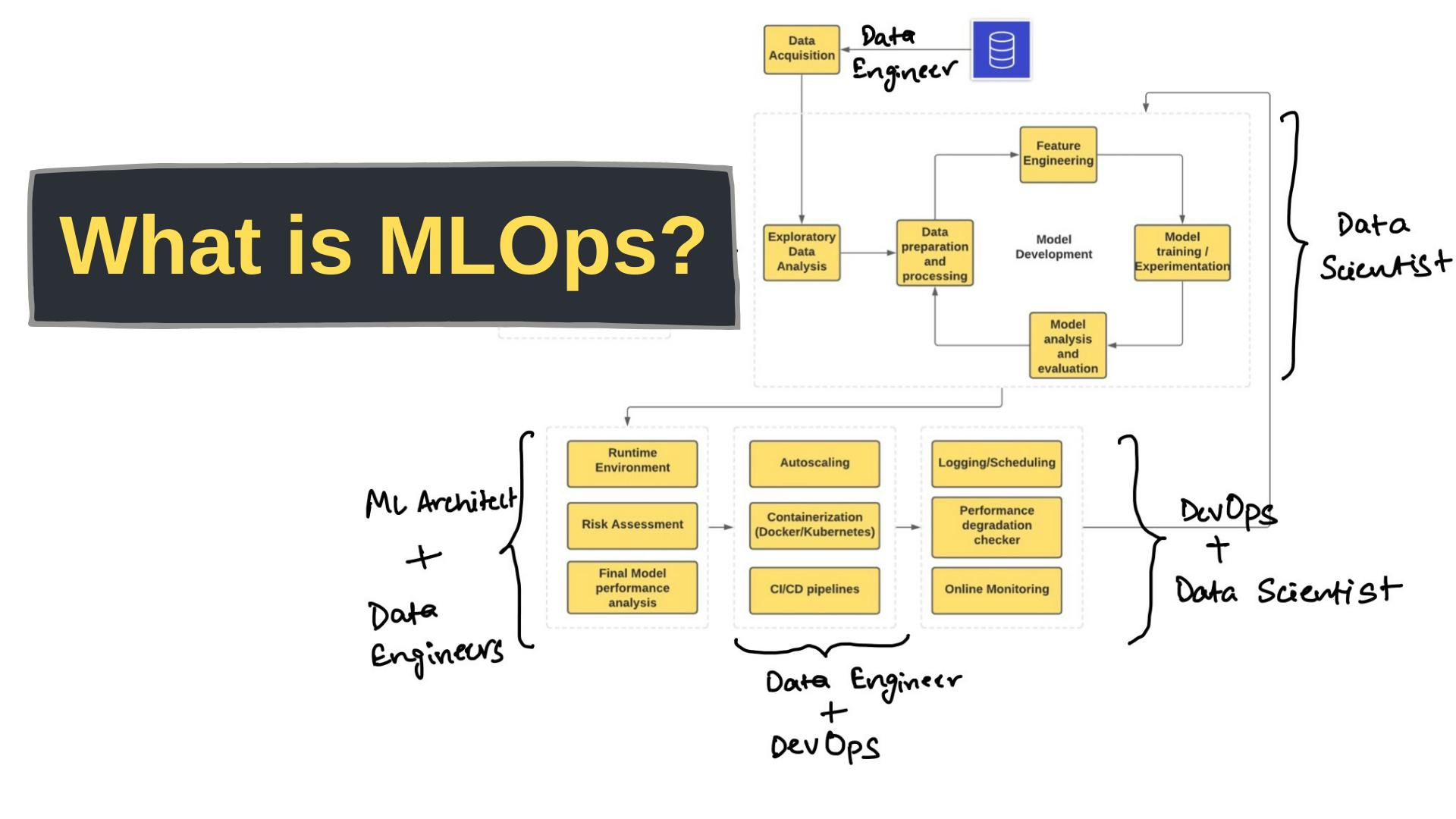

Building scalable ML applications requires a good knowledge of MLOps, which in turn requires a good understanding of the ML workflow.

Learning the important ML algorithms and applying them to datasets does make us feel accomplished. However, the actual goal of any machine learning project should be to turn the model into a working application that many people can use. This requires proper planning of the operations that help us prepare and deploy a solid application, one that is scalable and can be monitored regularly for changes in performance.

Operations in ML workflow

It is important for any ML application to go through the following stages:

Data Preparation - Data is collected from different sources and processed. This step is very important as unclean data can directly affect the performance of the model. Therefore, several data preprocessing techniques are applied before using the data for model training.

Model Training - Machine learning models are selected based on the data we have processed. Different data distributions require different algorithms and an appropriate model should be selected for the data.

Model Evaluation - After training a model with a particular algorithm, it is important to check whether it is performing well. Techniques like cross-validation are used to evaluate models on held-out data, and A/B testing can compare models on live traffic.

Model Deployment - Once the model is trained and evaluated, it needs to be packaged for usage in an application. The application can then be deployed to production for end-users to interact with it.

Model Monitoring - Since the data can change over time, a model needs to be updated in time to keep up with such data drift. For this, we need to monitor its performance or some other metric to catch these changes and maintain the model's performance.
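To make the stages above a bit more concrete, here is a minimal sketch of the first four using only the Python standard library. Everything in it is an assumption made for illustration: the synthetic data, the one-feature least-squares model, the function names, and the hash-based version tag. A real project would most likely use a library such as scikit-learn instead.

```python
import hashlib
import pickle
import random
import statistics


def prepare(rows):
    """Data preparation: drop rows that have a missing feature or label."""
    return [(x, y) for x, y in rows if x is not None and y is not None]


def train(rows):
    """Model training: fit y = slope * x + intercept by least squares."""
    xs = [x for x, _ in rows]
    ys = [y for _, y in rows]
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in rows) / var_x
    return {"slope": slope, "intercept": mean_y - slope * mean_x}


def predict(model, x):
    return model["slope"] * x + model["intercept"]


def cross_validate(rows, k=5):
    """Model evaluation: mean absolute error averaged over k folds."""
    folds = [rows[i::k] for i in range(k)]
    errors = []
    for i, held_out in enumerate(folds):
        # Train on every fold except the one held out for testing.
        train_rows = [r for j, fold in enumerate(folds) if j != i for r in fold]
        fold_model = train(train_rows)
        errors.append(
            statistics.fmean([abs(predict(fold_model, x) - y) for x, y in held_out])
        )
    return statistics.fmean(errors)


# Synthetic data (an assumption): y is roughly 2x + 1, plus two unclean rows.
rng = random.Random(0)
raw = [(float(x), 2.0 * x + 1.0 + rng.gauss(0, 0.1)) for x in range(50)]
raw += [(None, 3.0), (4.0, None)]

clean = prepare(raw)
cv_error = cross_validate(clean)
model = train(clean)

# Model deployment (packaging only): serialize the model and tag it with a
# hash of its bytes so that the exact artifact can be identified later.
packaged = pickle.dumps(model)
version = hashlib.sha256(packaged).hexdigest()[:12]
restored = pickle.loads(packaged)

print(f"rows kept: {len(clean)}, cross-validated MAE: {cv_error:.3f}")
print(f"model version {version}, prediction for x=10: {predict(restored, 10.0):.2f}")
```

The monitoring stage is left out here because it only makes sense once the application is receiving new data.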

Optimization and automation of the ML workflow

Since there are so many operations involved in the development of an ML application, it is always helpful to have tools that automate the workflow. Our goals for automating the ML workflow are:

Efficiency - Automating and optimizing the tasks associated with MLOps can save a lot of time and also reduce errors, since the tasks are run the same way each time by software rather than by hand.

Scalability - Automating the workflow allows individuals and companies to work on multiple models and large datasets. Scalability also comes from web technologies and containerization tools.

There are different tools for automating the ML workflow. Some of the popular ones are:

Jenkins - It is an open-source automation server, which can be used to automate tasks like building, testing and deploying ML models.

Kubernetes - It is a container-orchestration platform that can be used to scale ML applications and automate deployment operations. It is commonly paired with Docker, a containerization platform that packages the application into lightweight containers for Kubernetes to manage.

Python is a very popular programming language for machine learning use cases. Being able to write Python scripts and helper functions eases the process even further, because many of the tools in the ML workflow either have Python APIs or can run Python scripts as build steps. It is therefore an important skill to master.
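As an illustration of such a helper function, here is a rough sketch of a small pipeline runner that an automation server could call as a single build step. The function `run_pipeline` and the three stand-in steps are hypothetical names made up for this example, not part of Jenkins or any other tool.

```python
import time


def run_pipeline(steps):
    """Run named steps in order and stop at the first failure.

    `steps` is a list of (name, callable) pairs. Each callable receives the
    return value of the previous step (None for the first one). Returns the
    value of the last successful step and a report with one entry per step run.
    """
    report = []
    value = None
    for name, step in steps:
        started = time.perf_counter()
        try:
            value = step(value)
            status = "ok"
        except Exception as exc:
            status = f"failed: {exc}"
        report.append(
            {"step": name, "status": status, "seconds": time.perf_counter() - started}
        )
        if status != "ok":
            break  # Later steps depend on this one, so do not run them.
    return value, report


# Hypothetical stand-ins for real workflow steps.
def load_data(_):
    return [1.0, 2.0, 3.0, 4.0]


def train_model(data):
    return {"mean": sum(data) / len(data)}


def evaluate(model):
    if model["mean"] <= 0:
        raise ValueError("model failed the sanity check")
    return model


output, report = run_pipeline(
    [("load", load_data), ("train", train_model), ("evaluate", evaluate)]
)
for entry in report:
    print(f'{entry["step"]}: {entry["status"]}')
```

In a real build job, the script would typically exit with a non-zero status when a step fails, so that the automation server marks the build as failed.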

Monitoring of ML applications

Monitoring is an important aspect of MLOps. It is used to track the performance of the model along with the different issues that may arise, so there should be some metric that tells us when our application is underperforming. Some of the common things to monitor are:

Model Performance - We can judge the model's performance by the accuracy of its predictions, its response time etc.

Model Drift - Since data and its distribution change over time, a model trained on older data may no longer fit the current data, and its predictions get worse. Feature drift, where the distribution of individual input features shifts, is an important form of data drift and degrades the model's performance over time.

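Model Versioning - We keep track of the different versions of a model and the data used to train each of them, so that a change in performance can be traced back to a specific version.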
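Drift in a single numeric feature can be measured in many ways. The sketch below uses the Population Stability Index (PSI), which is one common choice and not the only one. The equal-width binning, the synthetic samples, and the 0.1 / 0.25 thresholds mentioned afterwards are assumptions for illustration.

```python
import math
import random


def psi(reference, current, bins=10):
    """Population Stability Index between two samples of one numeric feature.

    Bins are equal-width and taken from the range of the reference sample.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # Fall back to 1.0 if all values are equal.

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the reference range into the edge bins.
            index = min(max(int((v - lo) / width), 0), bins - 1)
            counts[index] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Synthetic feature values (an assumption): training data, fresh data from the
# same distribution, and fresh data whose mean has shifted by one standard
# deviation.
rng = random.Random(42)
training = [rng.gauss(0.0, 1.0) for _ in range(2000)]
same = [rng.gauss(0.0, 1.0) for _ in range(2000)]
shifted = [rng.gauss(1.0, 1.0) for _ in range(2000)]

print(f"PSI, same distribution: {psi(training, same):.3f}")
print(f"PSI, shifted mean:      {psi(training, shifted):.3f}")
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but suitable thresholds depend on the application.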

Fortunately, we have a lot of tools to manage such processes. Some popular ones are:

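TensorBoard - It is a web-based visualization tool that ships with TensorFlow. It is mainly used to track metrics such as loss and accuracy during training and to compare runs. It does not detect drift on its own, but logging evaluation metrics to it over time can help reveal a decline in performance.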

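Prometheus - It is an open-source monitoring and alerting toolkit. It collects metrics as time series by scraping HTTP endpoints and can raise alerts based on rules we define, for example when response time or prediction accuracy crosses a threshold.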
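To give a feel for what Prometheus reads when it scrapes an application, here is a small sketch that renders model metrics in the Prometheus text exposition format. The function `render_metrics` and the metric names are hypothetical. In practice the official `prometheus_client` Python library would normally produce and serve this output, and label values would also need escaping, which is skipped here.

```python
def render_metrics(metrics, labels=None):
    """Render gauge metrics in the Prometheus text exposition format.

    `metrics` maps a metric name to a (help text, value) pair. `labels` is an
    optional dict of label names to values that is attached to every sample.
    """
    label_text = ""
    if labels:
        pairs = ",".join(f'{key}="{value}"' for key, value in sorted(labels.items()))
        label_text = "{" + pairs + "}"
    lines = []
    for name, (help_text, value) in metrics.items():
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"{name}{label_text} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical metric names and values for a deployed model.
text = render_metrics(
    {
        "model_accuracy": ("Accuracy on the latest labelled batch", 0.93),
        "model_response_seconds": ("Mean prediction latency in seconds", 0.042),
    },
    labels={"version": "v1"},
)
print(text)
```

An application would serve this text over HTTP, conventionally at a `/metrics` path, for Prometheus to scrape at regular intervals.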

Conclusion

Understanding MLOps is key to becoming a great machine learning engineer and to bringing real-world applications into your machine learning journey. Big tech companies use MLOps strategies in their products to provide the best service to their end users and to update and maintain their infrastructure for the future.

So, that's it for the article. I know that there are a lot of tools to learn, but we can build up our knowledge through different projects and articles. The article that I learned this from is linked here.

If you have reached the end of this article, I am much obliged. I hope it has brought something of value to you ;). Feel free to like or not like this article, and please share your thoughts in the comments if you have any.

You can also follow me on Hashnode and other platforms if you like my writing.

Help your friends out by sharing this article. Cheers!